Evaluation is the act of judging the value, merit, worth, or significance of things. Those “things” can be performances, programs, processes, policies, or products, to name a few. Evaluation is ubiquitous in everyday life. It is evident in annual performance appraisals, the assessment of a good golf swing, scholarly reviews of books, quality control procedures in manufacturing, and the ratings of products provided by agencies such as Consumers Union. Forensic evaluations by psychiatrists are a common aspect of many court proceedings; teachers evaluate the performance of their students; expert judges assess and score athlete performances in high school diving competitions; drama critics for local newspapers appraise the quality of local theatre productions; foundation officers examine their investments in social programs to determine whether they yield value for money. Evaluation is an organizational phenomenon, embedded in policies and practices of governments, in public institutions like schools and hospitals, as well as in corporations in the private sector and foundations in the philanthropic world. Evaluation and kindred notions of appraising, assessing, auditing, rating, ranking, and grading are social phenomena that all of us encounter at some time or another in life.

However, we can also identify what might be called a “professional approach” to evaluation that is linked to both an identity and a way of practicing that sets it apart from everyday evaluation undertakings. That professional approach is characterized by specialized, expert knowledge and skills possessed by individuals claiming the designation of “evaluator.” Expert knowledge is the product of a particular type of disciplined inquiry, an organized, scholarly, and professional mode of knowledge production. The prime institutional locations of the production of evaluation knowledge are universities, policy analysis and evaluation units of government departments or international organizations, and private research institutes and firms. The primary producers are academics, think tank experts, and other types of professionals engaged in the knowledge occupation known as “evaluation,” or perhaps more broadly “research and evaluation.”1 Evaluation is thus a professional undertaking that individuals with a specific kind of educational preparation claim to know a great deal about, on the one hand, and practice in systematic and disciplined ways, on the other hand. This knowledge base and the methodical and well-organized way of conducting evaluation set the expert practice apart from what occurs in the normal course of evaluating activities in everyday life. Expert evaluation knowledge, particularly of the value of local, state, national, and international policies and programs concerned with the general social welfare (e.g., education, social services, public health, housing, criminal justice), is in high demand by governments, nongovernmental agencies, and philanthropic foundations.

FOCUS OF THE PRACTICE

The rather awkward word used to describe the plans, programs, projects, policies, performances, designs, material goods, facilities, and other things that are evaluated is evaluands, a term of art that I try my best to avoid using. It is the evaluation of policies and programs (also often referred to as social interventions) that is of primary concern in this book. A program is a set of activities organized to achieve a particular goal or set of objectives. Programs can be narrow in scope and limited to a single site (for example, a health education program in a local community to reduce the incidence of smoking) or broad and unfolding across multiple sites, such as a national preschool education initiative that involves delivering a mix of social and educational services to preschool-aged children and their parents in several locations in major cities across the country. Policies are broader statements of an approach to a social problem or a course of action that often involve several different kinds of programs and activities. For example, the science, technology, engineering, and mathematics (STEM) education policy, supported by the U.S. National Science Foundation (NSF) (among other agencies), is aimed in part at increasing the participation of students, especially women and minorities, in STEM careers. That aim is realized through a variety of different kinds of programs including teacher preparation programs; informal, out-of-school education programs; educational efforts attached to NSF-funded centers in science and engineering; and programs that provide research experience in STEM fields for undergraduates.

However, even if we restrict our focus to the evaluation of programs, there remains an incredible variety including training programs (e.g., job training to reduce unemployment; leadership development for youth programs); direct service interventions (e.g., early childhood education programs); indirect service interventions (e.g., a program providing funding to develop small- and medium-sized business enterprises); research programs (e.g., a program aimed at catalyzing transdisciplinary research such as the U.S. National Academies Keck Futures Initiative); surveillance systems (e.g., the U.S. National Security Agency’s controversial electronic surveillance program known as PRISM or, perhaps in a less sinister way of thinking, a program that monitors health-related behaviors in a community in order to improve some particular health outcome); knowledge management programs; technical assistance programs; social marketing campaigns (e.g., a public health program designed to encourage people to stop smoking); programs that develop advocacy coalitions for policy change; international development programs that can focus on a wide variety of interventions in health, education, food safety, economic development, the environment, and building civil society; strategy evaluation;2 and programs that build infrastructure in or across organizations at state or local levels to support particular educational or social initiatives.

AIMS OF THE PRACTICE

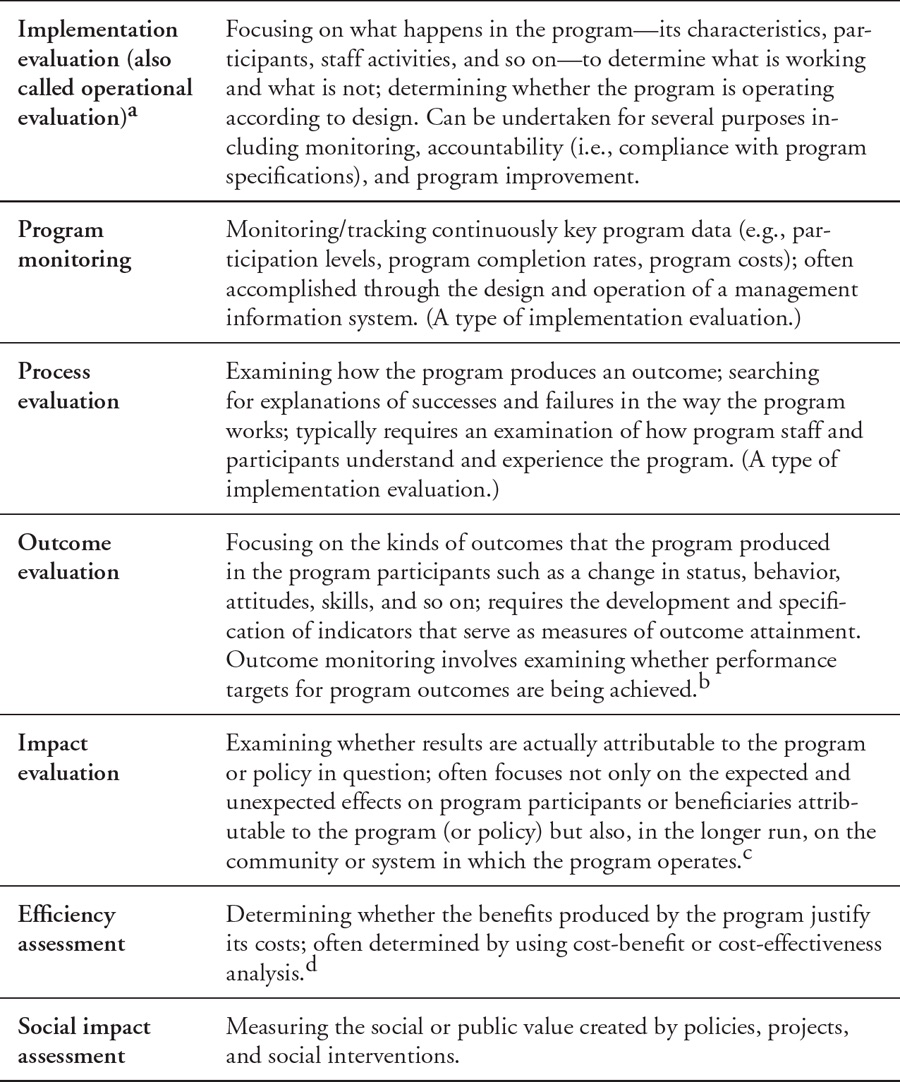

Evaluation is broadly concerned with quality of implementation, goal attainment, effectiveness, outcomes, impact, and costs of programs and policies.3 Evaluators provide judgments on these matters to stakeholders who are both proximate and distant. Proximate stakeholders include clients sponsoring or commissioning an evaluation study as well as program developers, managers, and beneficiaries. Distant stakeholders can include legislators, the general public, influential thought leaders, the media, and so on. Moreover, policies and programs can be evaluated at different times in their development and for different purposes, as shown in Table 1.

Table 1. Types of Evaluation

a See Khandker, Koolwal, and Samad (2010: 16–18).

b The literature is not in agreement on the definitions of outcome and impact evaluation. Some evaluators treat them as virtually the same, others argue that outcome evaluation is specifically concerned with immediate changes occurring in recipients of the program while impact examines longer-term changes in participants’ lives.

c Khandker, Koolwal, and Samad (2010); see also the InterAction website for its Impact Evaluation Guidance Notes at http://www.interaction.org/impact-evaluation-notes

d Levin and McEwan (2001).

SCOPE OF THE PRACTICE

Evaluation of policies and programs in the United States is an important part of the work of legislative agencies and executive departments including the Government Accountability Office, the Office of Management and Budget, the Centers for Disease Control and Prevention, the Departments of State, Education, and Labor, the National Science Foundation, the U.S. Cooperative Extension Service, the Office of Evaluation and Inspections in the Office of the Inspector General in the Department of Health and Human Services, and the Office of Justice Programs in the U.S. Department of Justice. At state and local levels evaluations are undertaken by legislative audit offices, as well as by education, social service, public health, and criminal justice agencies (often in response to federal evaluation requirements).

Professional evaluation of programs and policies is also an extensive global undertaking. Governments throughout the world have established national-level evaluation offices dealing with specific public sector concerns, such as the Danish Evaluation Institute, charged with examining the quality of day care centers, schools, and educational programs throughout the country, or the Agency for Healthcare Research and Quality in the U.S. Department of Health and Human Services, which investigates the quality, efficiency, and effectiveness of health care for Americans. At the national level, one also finds evaluation agencies charged with oversight for all public policy, such as the Spanish Agency for the Evaluation of Public Policies and Quality of Services and the South African Department of Performance Monitoring and Evaluation. These omnibus agencies not only determine the outcomes and impacts of government policies but in so doing combine the goals of improving the quality of public services, rationalizing the use of public funds, and enhancing the public accountability of government bodies.

Evaluations are both commissioned and conducted at think tanks and at not-for-profit and for-profit institutions such as the Brookings Institution and the Urban Institute, the Education Development Center, WestEd, American Institutes for Research, Westat, and the International Initiative for Impact Evaluation (3ie). Significant evaluation work is undertaken or supported by philanthropies such as the Bill & Melinda Gates Foundation and the Rockefeller Foundation. Internationally, evaluation is central to the work of multilateral organizations including the World Bank, the Organisation for Economic Co-operation and Development (OECD), the United Nations Educational, Scientific, and Cultural Organization (UNESCO), and the United Nations Development Programme (UNDP), as well as government agencies around the world concerned with international development such as the U.S. Agency for International Development (USAID), the International Development Research Centre in Canada (IDRC), the Department for International Development (DFID) in the United Kingdom, and the Science for Global Development Division of the Netherlands Organization for Scientific Research (NWO-WOTRO).

The scope and extent of professional evaluation activity are evident from the fact that twenty academic journals4 are devoted exclusively to the field, and there are approximately 140 national, regional, and international evaluation associations and societies.5 This worldwide evaluation enterprise is also loosely coupled to the related work of professionals who do policy analysis, performance measurement, inspections, accreditation, quality assurance, testing and assessment, organizational consulting, and program auditing.

CHARACTERISTICS OF THE PRACTICE AND ITS PRACTITIONERS

The professional practice of evaluation displays a number of interesting traits, not least of which is whether it actually qualifies as a profession—a topic taken up later in this book. Perhaps its most thought-provoking trait is that it is heterogeneous in multiple ways. Evaluators examine policies and programs that vary considerably in scope from a local community-based program to national and international efforts spread across many sites. They evaluate a wide range of human endeavors reflected in programs to stop the spread of HIV/AIDS, to improve infant nutrition, to determine the effectiveness of national science policy, to judge the effectiveness of education programs, and more. This diversity in focus and scope of evaluation work is accompanied by considerable variation in methods and methodologies including the use of surveys, field observations, interviewing, econometric methods, field experiments, cost-benefit analysis, and network and geospatial analysis. For its foundational ideas (for example, the meaning of evaluation, the role of evaluation in society, the notion of building evaluation capacity in organizations), its understanding of the fields in which it operates, and many of its methods, evaluation draws on other disciplines.

The individuals who make up the community of professional practitioners are a diverse lot as well. For some, the practice of evaluation is a specialty taken up by academic researchers within a particular field like economics, sociology, education, nursing, public health, or applied psychology. For others, it is a professional career pursued as privately employed or as an employee of a government, not-for-profit or for-profit agency. There are three broad types of evaluation professionals: (1) experienced evaluators who have been practicing for some time either as privately employed, as an employee of a research organization or local, state, federal, or international agency, or as an academic; (2) novices entering the field of program evaluation for the first time seeking to develop knowledge and skills; and (3) “accidental evaluators,” that is, people without training who have been given responsibility for conducting evaluations as part of their portfolio of responsibilities and who are trying to sort out exactly what will be necessary to do the job.6

PREPARATION FOR THE PRACTICE

With only two exceptions (evaluation practice in Canada and Japan), at the present time there are no formal credentialing or certification requirements to become an evaluator. Some individuals receive formal training and education in evaluation in master’s and doctoral degree programs throughout the U.S. and elsewhere in the world.7 However, at the doctoral level, evaluation is rarely, if ever, taught as a formal academic field in its own right but as a subfield or specialty located and taught within the concepts, frameworks, methods, and theories of fields such as sociology, economics, education, social work, human resource education, public policy, management, and organizational psychology.

Perhaps the most significant sources of preparation for many who are “accidental evaluators,” or those just learning about the practice while employed in some other professional capacity, are short courses, training institutes, workshops, and webinars often sponsored by professional evaluation associations; certificate programs offered by universities in online or traditional classroom settings;8 and “toolkits” prepared by agencies and available on the Internet.9 The rapid development and expansion of these types of training resources are, in part, a response to the demand from practitioners in various social service, educational, and public health occupations to learn the basics of evaluation models and techniques because doing an evaluation has become one of their job responsibilities (perhaps permanently but more often only temporarily).10

This trend to “train up” new evaluators is also consistent with broader cultural efforts to scientize and rationalize the professional practices of education, health care, and social services by focusing directly on measurable performance, outcomes, and the development of an evidence base of best practices. In this climate, evaluators function as technicians who, when equipped with tools for doing results-based management, program monitoring, performance assessment, and impact evaluation, provide reliable evidence of outcomes and contribute to the establishment of this evidence base. However, while providing evidence for accountability and decision making is certainly an important undertaking, the danger in this way of viewing the practice of evaluation is that assurance is substituted for valuation. Evaluation comes to be seen for the most part as one of the technologies needed for assuring effective and efficient management and delivery of programs as well as for documenting achievement of targets and expected outcomes.11 The idea that evaluation is a form of critical appraisal concerned with judging value begins to fade from concern.

At worst, this trend to narrowly train contributes to the erosion of the ideal of evaluation as a form of social trusteeship, whereby a professional’s work contributes to the public good, and its replacement with the notion of technical professionalism, where the professional is little more than a supplier of expert services to willing buyers.12 Focusing almost exclusively on efforts to train individuals to do evaluation can readily lead to a divorce of technique from the calling that brought forth the professional field in the first place. Furthermore, while learning about evaluation approaches along with acquiring technical skills in designing and conducting evaluation are surely essential to being a competent practitioner, a primary focus on training in models and methods (whether intending to do so or not) can create the impression that evaluation primarily requires only “knowing how.”

1. Stone (2001: 1).

2. This is a unique form of policy evaluation where the unit of analysis is agency or organizational strategy as opposed to a single program or policy; see Patrizi and Patton (2010). The evaluation examines an organization’s strategic perspective (how the organization thinks about itself and whether within the organization there is a consistent view) and its strategic position (where it aims to have effect and contribute to outcomes) and how well aligned understandings of this perspective are across different constituencies of the organization including its leadership, staff, funders, partners, and so on. The strategy to be evaluated could well be a particular organization’s own evaluation strategy.

3. Theodoulou and Kofinis (2003).

4. African Evaluation Journal; American Journal of Evaluation; Canadian Journal of Program Evaluation; Educational Evaluation and Policy Analysis; Educational Research and Evaluation; Evaluation and the Health Professions; Evaluation Journal of Australasia; Evaluation and Program Planning; Evaluation Review; Evaluation: The International Journal of Theory, Research and Practice; International Journal of Evaluation and Research in Education; International Journal of Educational Evaluation for Health Professions; Japanese Journal of Evaluation Studies; Journal of MultiDisciplinary Evaluation; LeGes (journal of the Swiss Evaluation Society); New Directions for Evaluation; Practical Assessment, Research, and Evaluation; Studies in Educational Evaluation; Zeitschrift für Evaluation. Of course, papers on evaluation are also published in discipline-specific journals, e.g., Journal of Community Psychology, American Journal of Public Health, and the Journal of Policy Analysis and Management.

5. See the list of evaluation organizations at the web site of the International Organisation for Cooperation in Evaluation (IOCE) at http://www.ioce.net/en/index.php. See also Rugh (2013).

6. Stevahn, King, Ghere, and Minnema (2005: 46).

7. See the list of U.S. universities offering master’s degrees, doctoral degrees, and/or certificates in evaluation at http://www.eval.org/p/cm/ld/fid=43. There are professional master’s programs in evaluation in Austria, Germany, Denmark, and Spain.

8. For example, Auburn University, Tufts University, University of Minnesota, and The Evaluators’ Institute at George Washington University.

9. At this writing, entering the phrase “evaluation toolkit” in a Google search yielded almost 1.4 million hits and included toolkits prepared by a wide variety of actors in the field: the World Bank; the Centers for Disease Control and Prevention; FSG (a consulting firm); the Global Fund to Fight AIDS, Malaria, and Tuberculosis; the Coalition for Community Schools; the W.K. Kellogg Foundation; and the Agency for Healthcare Research and Quality (AHRQ) National Resource Center, to name but a few.

10. Indirect evidence of the growing significance of these training resources for preparing evaluators comes from trends in the composition of the membership of the American Evaluation Association—the largest evaluation membership organization in the world, with approximately 7,800 members. Studies of the membership in recent years have revealed that practitioner and “accidental” pathways into the profession are four times more prevalent than “academic” pathways. Moreover, the association reports that only 31% of its membership identifies as college or university affiliated.

11. Schwandt (2008).

12. Sullivan (2004).